Word Embeddings Demo

RPubs - Word Embeddings Demo | GitHub Repo - Word2Vec Embedding Projector in TensorFlow

This R Markdown report, published to RPubs, demonstrates several machine learning and natural language processing techniques for building and exploring word embeddings.

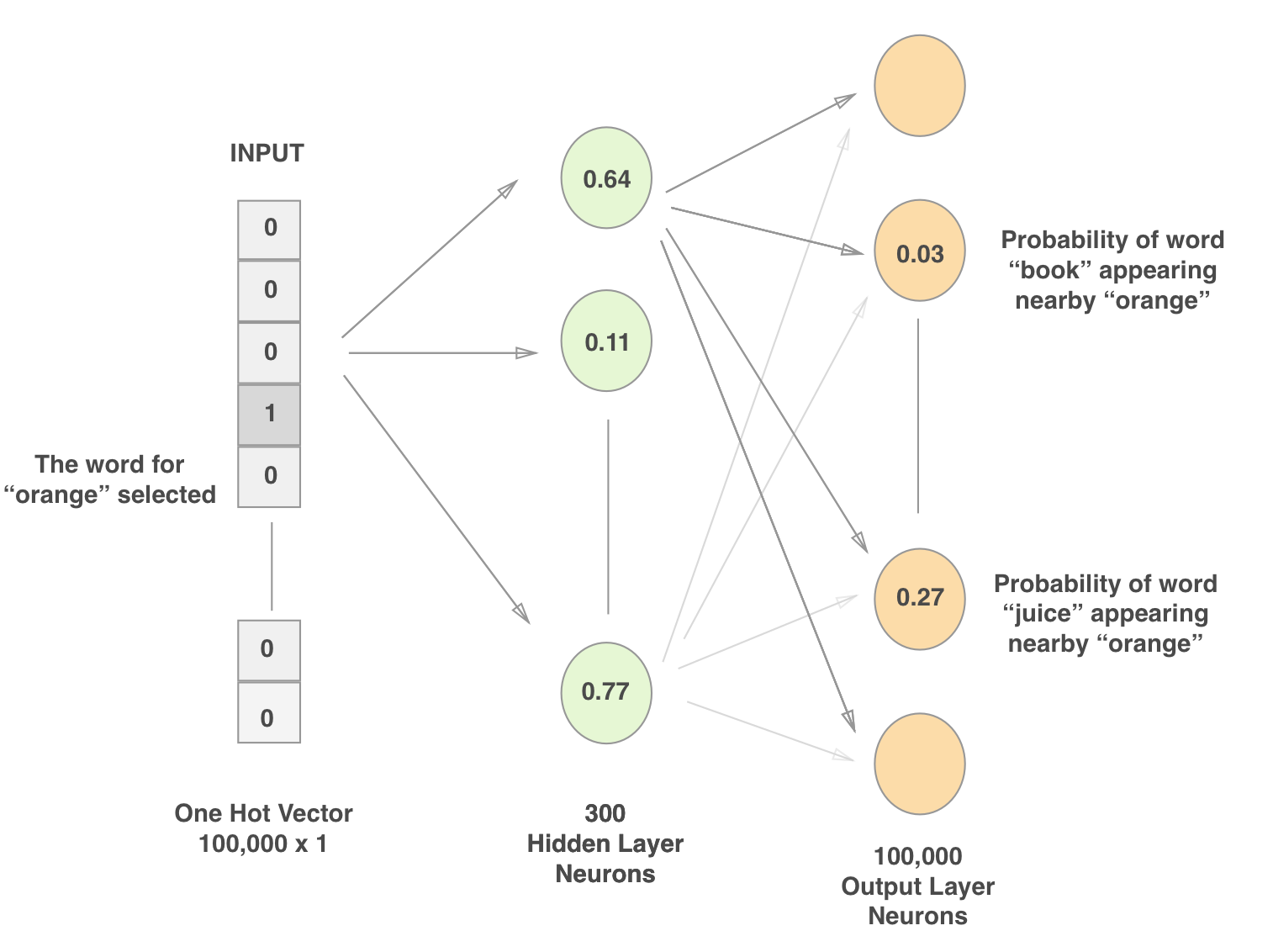

Word embeddings use natural language processing and machine learning techniques to map each word in a selected vocabulary to a vector of real numbers. For example:

“DRUG” = [-3.2030731e-02, 2.7105689e-01, -4.9149340e-01, 6.1396444e-01, … ]

By representing words as vectors, we can map each word to a point in a high-dimensional geometric space. The goal of a word embedding model is to place words in this space such that words with high semantic similarity are close together. How these vectors are constructed depends on the model or algorithm used. The Word2Vec and FastText algorithms are the focus of this demo.
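To make "close together" concrete, here is a minimal Python sketch that ranks words by cosine similarity, one common closeness measure for embeddings. The three 4-dimensional vectors are made up for illustration and are not output from the demo's Word2Vec or FastText models; the helper names `cosine_similarity` and `most_similar` are likewise hypothetical.

```python
import math

# Hypothetical 4-dimensional embeddings, invented for illustration only.
# Real Word2Vec/FastText vectors typically have 100+ dimensions.
embeddings = {
    "drug": [0.9, 0.1, 0.3, 0.0],
    "medication": [0.8, 0.2, 0.4, 0.1],
    "banana": [0.0, 0.9, 0.1, 0.7],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors):
    """Rank every other vocabulary word by cosine similarity to `word`."""
    query = vectors[word]
    scores = [
        (other, cosine_similarity(query, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for other, score in most_similar("drug", embeddings):
        print(f"{other}: {score:.3f}")
```

With these toy vectors, "medication" ranks above "banana" as a neighbor of "drug", which is the behavior a well-trained embedding model aims for on real text.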

Sample Analyses

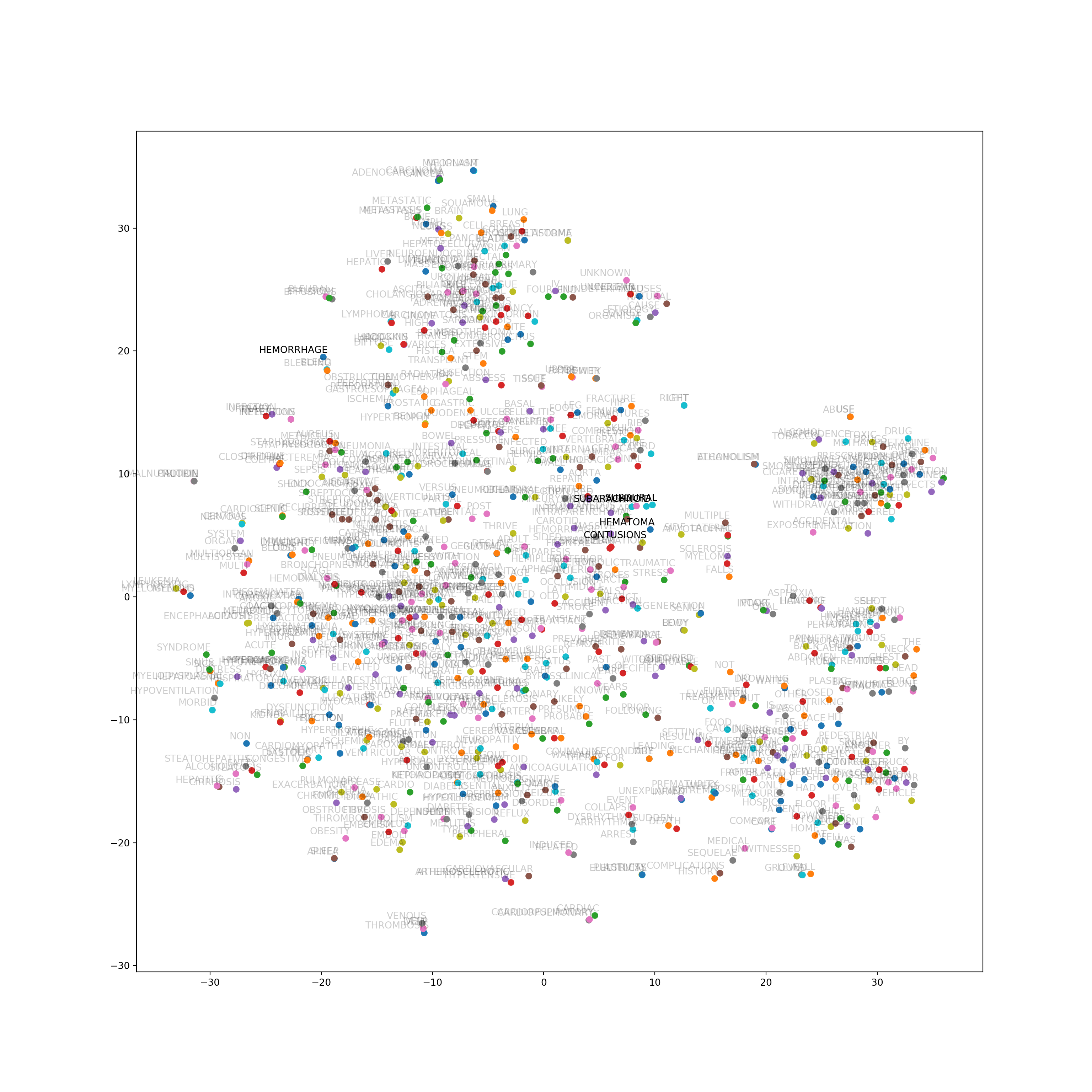

- Examining the quality of embeddings produced by Word2Vec and FastText algorithms

- t-SNE dimensionality reduction to visualize high-dimensional vectors in 2D

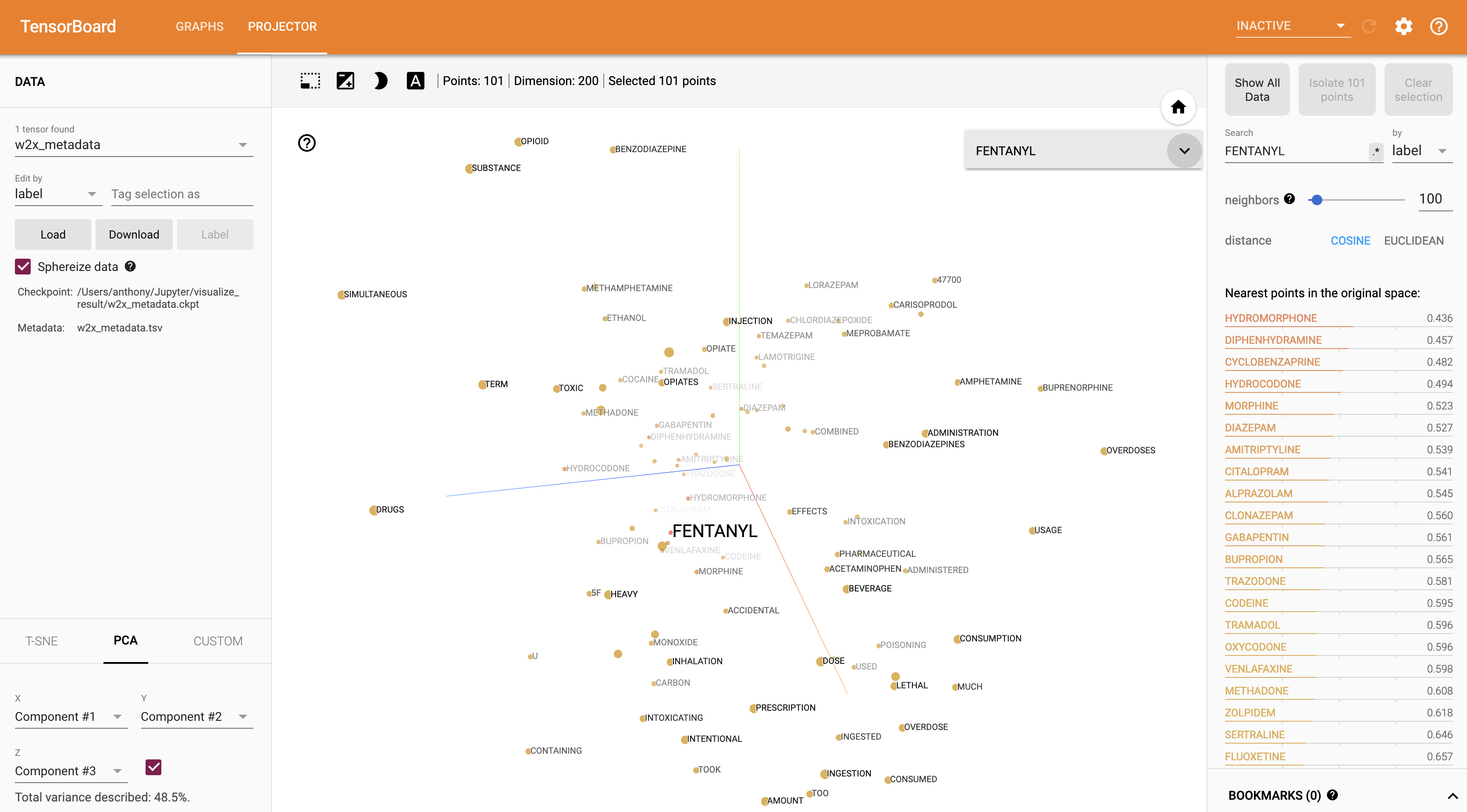

- Exploring Word2Vec embeddings using the TensorFlow Embedding Projector
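
For the Embedding Projector item above, the projector can load embeddings from two tab-separated files: one with a vector per line and one with the matching label per line. The Python sketch below shows one way to produce that text. The function name `to_projector_tsv`, the sample vectors, and the output file names are assumptions for illustration; this is not the export code used in this repo.

```python
def to_projector_tsv(vectors):
    """Build (vectors_tsv, metadata_tsv) strings from a word -> vector dict.

    Line i of the vectors text holds the tab-separated components of the
    word on line i of the metadata text. A single-column metadata file is
    written without a header row.
    """
    words = list(vectors)
    vector_lines = ["\t".join(str(x) for x in vectors[w]) for w in words]
    vectors_tsv = "\n".join(vector_lines) + "\n"
    metadata_tsv = "\n".join(words) + "\n"
    return vectors_tsv, metadata_tsv


if __name__ == "__main__":
    # Hypothetical 2-dimensional vectors, invented for illustration only.
    sample = {"drug": [0.5, -1.0], "dose": [0.25, 2.0]}
    vectors_tsv, metadata_tsv = to_projector_tsv(sample)
    # Hypothetical file names; any names work when uploading to the projector.
    with open("vectors.tsv", "w", encoding="utf-8") as f:
        f.write(vectors_tsv)
    with open("metadata.tsv", "w", encoding="utf-8") as f:
        f.write(metadata_tsv)
```

Keeping the two files in the same row order is what lets the projector attach the right word to each point.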