Face Detection Using OpenCV

Choice of Dataset

Ring Doorbell videos

- Video surveillance recorded when motion is detected or the doorbell is rung

- MP4 videos range in length from 15 to 30 seconds and run at 15 frames per second

- Daytime and night-vision modes, which record in color and grayscale, respectively

Goal

Individuals are recognized largely by their facial features, so it is desirable to detect and isolate faces within a video. Face detection is important for video surveillance because the goal is to extract semantic information such as ‘an individual was on my doorstep at this timestamp’.

Techniques

Techniques from multimedia data mining are used in this project for preprocessing, classification/detection, and segmentation (extracting objects of interest from an image).

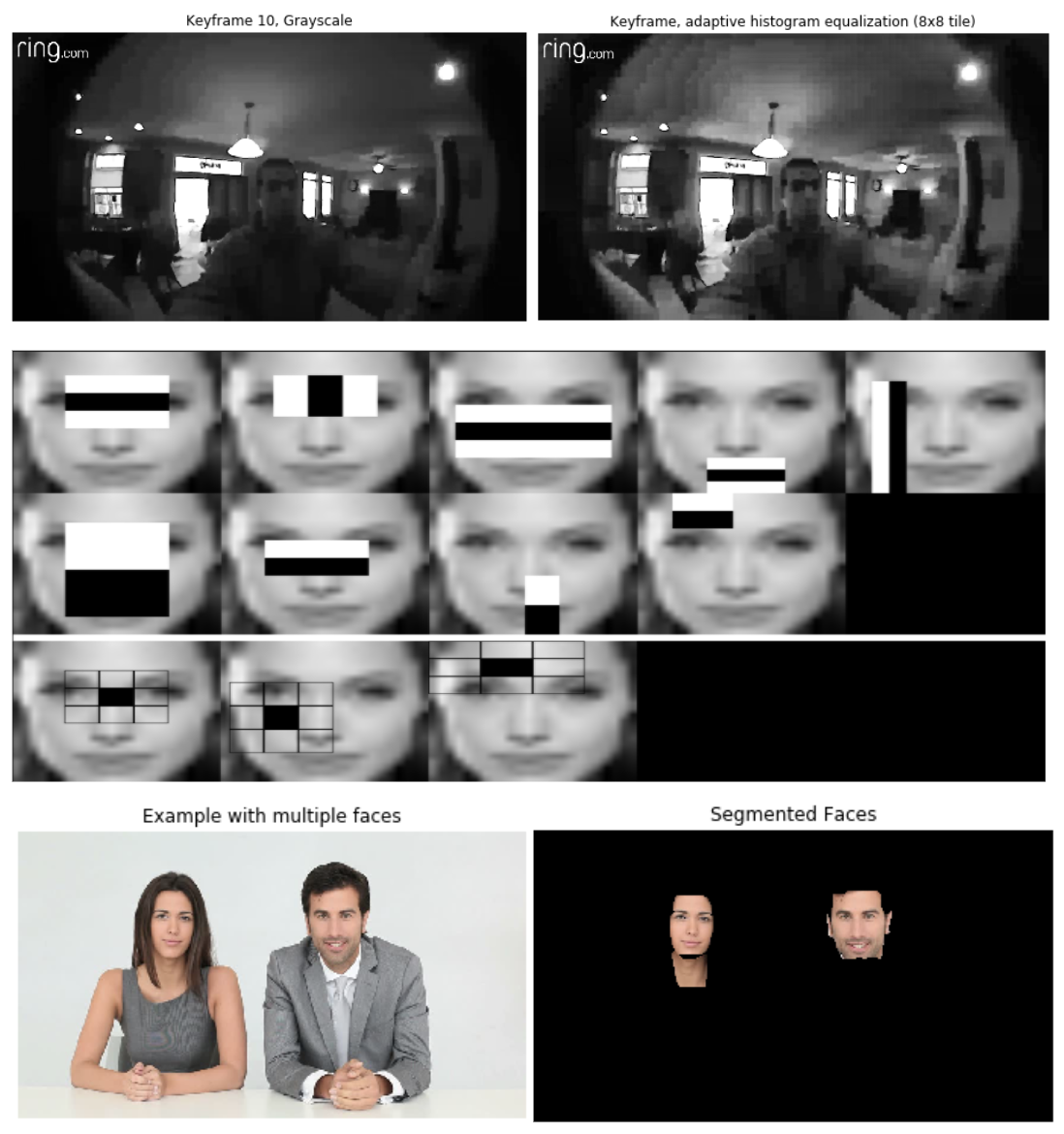

Because the Ring Doorbell model used in this project produced footage of inconsistent and often grainy quality, I tried several types of pre-processing to see which would best bring features of interest to the forefront. These included Gaussian or median blur for denoising, to reduce pixelation in the images, and locally adaptive histogram equalization, which adjusts contrast within local regions of an image. The latter is important when parts of the frame are prone to glare or poor lighting.

Haar feature-based cascade classifiers are pre-trained models available in the OpenCV package that can detect faces at various angles (frontal, angled, sideways/profile). Each classifier scans the image for regions likely to contain a face and returns a bounding box for each one.

Once a face is detected, the next step is to segment (extract) it from the background, which is done with the GrabCut algorithm. GrabCut typically works best interactively, when a rectangle or polygon is roughly drawn around the object of interest to initialize it, so a detector's bounding box is expected to serve well as that initialization.

High-Level Algorithm

- Create a VideoCapture object and read keyframes from the source video

- For each keyframe:

    - Convert to grayscale (required for equalization)

    - Apply CLAHE (Contrast Limited Adaptive Histogram Equalization) with an 8x8 tile grid to enhance contrast and edges

    - Run face detection with the frontal face classifier to detect all faces, returning their bounding boxes

    - For each bounding box, perform GrabCut segmentation (initialized with that bounding box) to obtain a mask, then combine the masks into a single mask that isolates all faces in the frame

    - Combine the four versions of the image (original, preprocessed, face bounding boxes, isolated faces) into a single four-panel frame

- Write the combined frames to an output video file

Demo

Limitations/Considerations

This method was tested on a number of Ring Doorbell videos with varying lighting and movement. In general, it works well when lighting conditions are favorable, movement is relatively slow, and the face is unobstructed.

The current model of the Ring Doorbell shows large variations in video quality depending on whether the camera is running in daytime or nighttime mode. Its videos have relatively poor clarity compared with high-resolution cameras, and the high levels of noise proved troublesome for pre-processing.

I found that the placement of the doorbell also made a significant difference for the Haar classifiers, which generally worked well when the face was captured straight on. However, at our current home, visitors first approach from lower ground at the bottom of a set of steps, and once they reach the door they are above the camera at a steep angle. As a result, there is usually only a very short window in which a face is at a suitable distance and angle. Changing the height or angle at which the doorbell is mounted could improve performance.

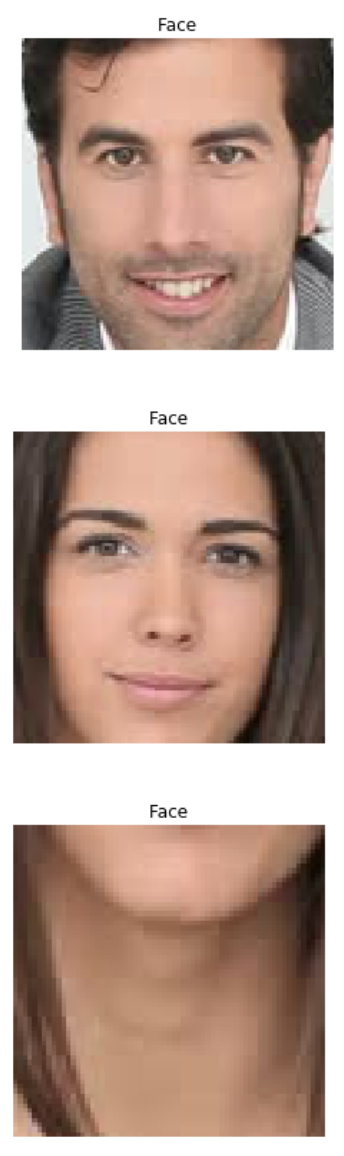

The chosen Haar classifiers also gave mixed results, with some false positives. For example, in the test image with multiple faces, the classifier picked up not only the faces of the two individuals but also the woman’s neck. More generally, faces in fair lighting with good visibility were detected in most cases, but a number of false positives also appeared, possibly as a result of poor picture quality or artifacts. To reduce these anomalies, I would recommend temporal analysis: accept a detection as a face only if it persists across a minimum number of frames.
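The temporal check recommended above could look like the following pure-Python sketch. It rests on stated assumptions: each detection in the current frame is matched greedily to the previous frame's detection with the highest intersection-over-union (IoU), and a face is reported only once its streak reaches `min_frames` consecutive frames. `PersistenceFilter`, `iou`, and the default thresholds are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0, min(ax + aw, bx + bw) - max(ax, bx))  # overlap width
    iy = max(0, min(ay + ah, by + bh) - max(ay, by))  # overlap height
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


class PersistenceFilter:
    """Confirm a detection only after it has matched in min_frames consecutive frames."""

    def __init__(self, min_frames=5, iou_threshold=0.3):
        self.min_frames = min_frames
        self.iou_threshold = iou_threshold
        self.tracks = []  # list of (last_box, consecutive_hits)

    def update(self, boxes):
        """Feed one frame's detections; return the boxes confirmed in this frame."""
        unmatched = list(self.tracks)
        new_tracks = []
        for box in boxes:
            # Greedily pick the best-overlapping track from the previous frame.
            best_idx, best_score = None, self.iou_threshold
            for i, (prev_box, _) in enumerate(unmatched):
                score = iou(box, prev_box)
                if score >= best_score:
                    best_idx, best_score = i, score
            hits = 1
            if best_idx is not None:
                # Each previous track can extend at most one current box.
                hits = unmatched.pop(best_idx)[1] + 1
            new_tracks.append((box, hits))
        # Tracks with no match this frame are dropped, which resets their streak.
        self.tracks = new_tracks
        return [box for box, hits in new_tracks if hits >= self.min_frames]
```

At 15 frames per second, `min_frames=5` corresponds to a face persisting for about a third of a second. Because a single missed frame resets the streak, a more forgiving variant could tolerate short gaps, which may matter given how brief the window of good face angles can be.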

Future Work

- Additional preprocessing steps to account for variations in lighting, movement, or angle

- Reducing false positives by combining classifiers (e.g., a frontal face should contain two eyes, and a face should be part of or adjacent to a body)

- Matching features of extracted faces against headshots of known individuals

- Producing queryable data on which individuals were identified at which timestamps